As a follow-up to the issues I raised in a recent post comparing PSSA scores over the years, and which I addressed in public comment at last Thursday’s Board Meeting, the District is now reviewing Math performance. I am told the two high school principals are collecting data and that the District plans to share the information with the Board through the Academic Standards Committee, whose meetings are public but not televised.

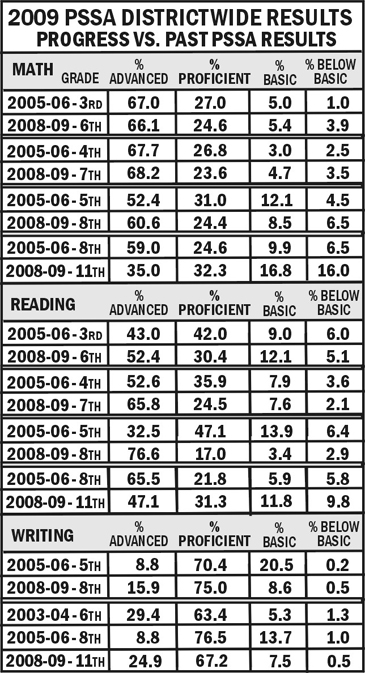

As a reminder, I took PSSA results from three years ago and compared them to this year’s results for the grade level three years higher, which compares the performance of roughly the same groups of students. Proficiency results in Reading were generally good over the years, as were those in Writing. The Math numbers, however, revealed a trend that calls for some examination. At every grade level, the number of students performing Below Basic increased. In three of the four grade levels where a comparison was possible, the percentage of students scoring Proficient or Advanced dropped.

Another concern was the drop in scores from 8th grade to 11th grade in Reading and Math. There are anecdotal reports that high school students put less effort into the PSSAs than into the SATs, since the PSSAs don’t affect grades or graduation, but the Writing results did not show the same drop. So did 11th graders try harder in Writing than in the other two subjects, or are other factors at work?

It is important to use these numbers to check for trends and problems now, because the testing system will be changing over the next few years. As I posted a few days ago, the PSSA-M will begin to be given to students with IEPs in Math this year and in Reading next year. This will change the makeup of the results, so the same comparisons won’t be possible. That won’t mean a problem has gone away, only that PSSA results from different years will no longer be based on the same groups of test-takers, so no valid comparison will be able to reveal those potential issues. Furthermore, the State is moving toward introducing Keystone exams in high schools within the next few years, which will further limit our ability to compare students’ progress over time.

It will be worth watching what decisions are made about curriculum and/or instruction in response to these lagging test results.
